Nov 2008

Lucky to have (access to) a cluster? Here some notes on how to do geospatial number crunching on it a.k.a. HPC (High Performance Computing).

Preparing the disks

We decided to use the ext3 file system. An initial problem was the formatting of the RAID5 disk set since it exceeded the file system specifications. Then, setting the ext3 block size to 4k instead of 1k we could format it.

Storage: a home for GIS data

The disks are available via NFS to all nodes (blades in our case). All raw/original data sets and the GRASS database are sitting in an NFS exported directory which I even link on my laptop to easily add/access/modify stuff.

Front-end machine and blades configuration

The cluster is a (currently) 56CPU blades system, we’ll expand to 128 CPUs later this year (16 blades with 2 procs a 4 core and 16GB RAM per blade). Additionally, we have a front-end machine to run the job manager and to link in further disks, all via NFS.

The blades are configured diskless, i.e. that once started, they receive their operating system from the front end machine via network (10GB/s ethernet). Like this, we have a single directory on the front end which contains all software, this is then propagated to all blades. Very convenient. We use Scientific Linux (the LiveDVD copied onto the disk, there is a special directory to store your modifications which are then merged in on the fly once you boot the blades, pretty cool concept). The job software is (SUN) Grid Engine, also free software. Job control with GRASS I have described here:

https://grass.osgeo.org/wiki/Parallel_GRASS_jobs

-> Grid Engine

GRASS: Avoiding replicated import of large data files through virtual linking

New in GRASS 6.4 is that you can just register a geodata file on the fly with r.external. Altogether I have 1.4TB of new GIS data from our province, naturally I didn’t want to by a new disk array just for my provincial GRASS location! Here r.external comes handy to minimize the “import” to a few bytes. As expected, it leverages GDAL to get data into GRASS, the overhead is minimal.

Power consumption

Power consumption is measured, too: The entire system consumes around 2000W (each blade less than 200W), so it’s going into the direction of “green” computing (there is no such thing!). If we had a solar panel at least…

Outcome

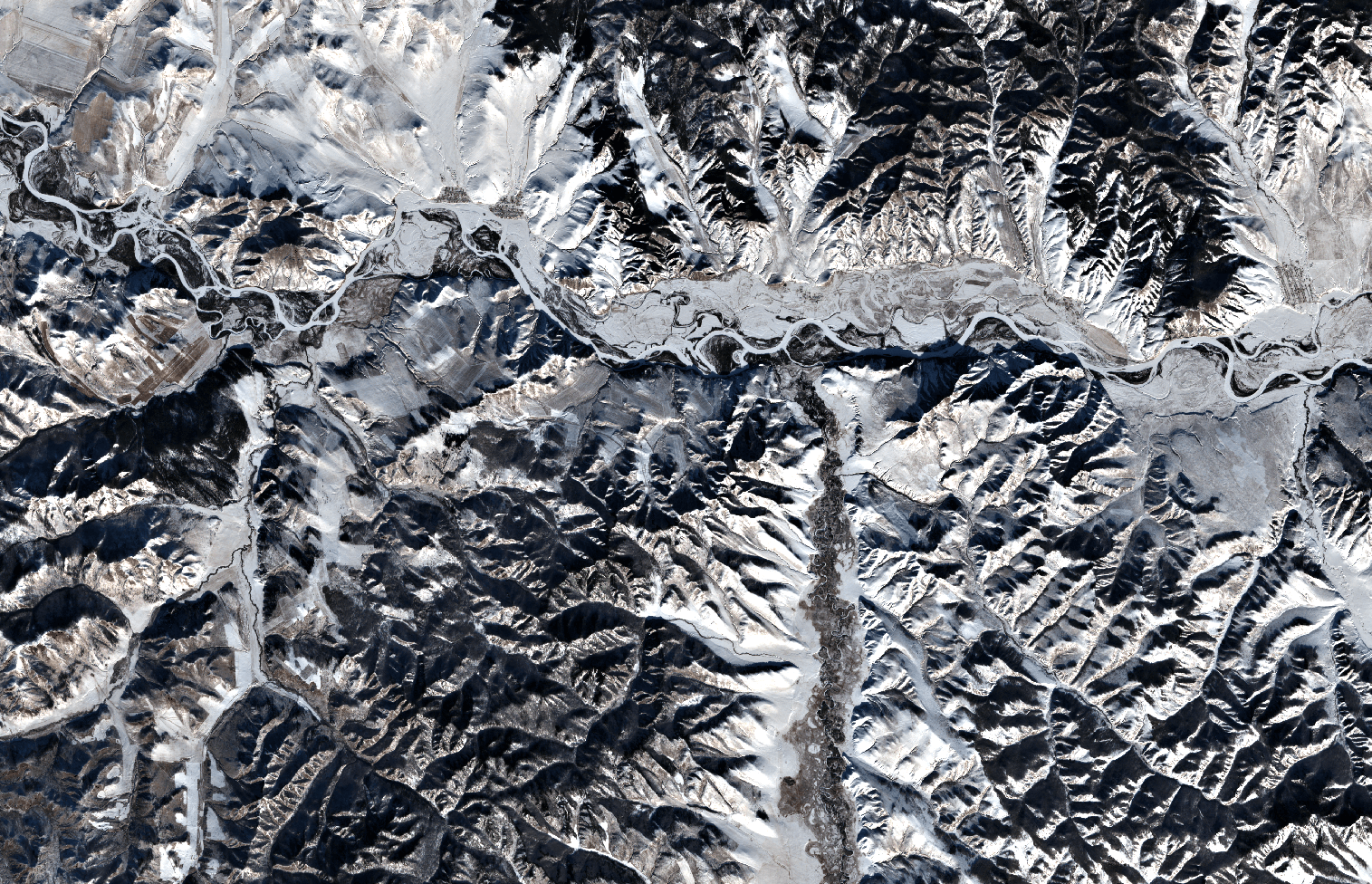

All in all a very nice solution. I made a stress test and removed all internal switches and shut down the blades while I was processing 8000 MODIS satellite maps. Everything survived and the Grid engine job manager collected the crashed jobs and restarted them without complaining. All resulting maps are collected in the target GRASS mapset and could be even exported to common GIS formats, if needed.

If you want to run Web Processing Services (e.g., pyWPS), you can likewise send each session to a node, giving you enormous possibilities for your customers.

Edit 2014:

“Big data” challenges on a cluster – limits and our solutions to overcome them:

- 2008: internal 10Gb network connection way too slow…

- Solution: TCP jumbo frames enabled (MTU > 8000) to speed up the internal NFS transfer

- 2009: hitting an ext3 filesystem limitation (not more than 32k subdirectories allowed but having more files in cell_misc/ as each GRASS GIS raster map consists of multiple files)

- Solution: adopting XFS filesystem [yikes! …. all to be reformated, i.e. some terabyes had to be “parked” temporarily]

- 2012: Free inodes on XFS exceeded

- Solution: Update XFS version [err, reformat everything again]

- 2013: I/O saturation in NFS connection between chassis and blades

- Solution: reduction to one job per blade (queue management), 21 blades * 2.5 billion input pixels + 415 million output pixels

- 2014: GlusterFS saturation

- Solution: Buy and use a new 48 port switch, ti implement 8-channel trunking (= 8 Gb/s)

Follow

Follow

Follow

Follow